It is a great pleasure to share that my organization, Brain Station 23, and I have been selected for the one-year STP (Seed Transformation Program) of Stanford University. I had a dream to study at both MIT and Stanford. Last October, I joined the Innovation Leadership Program, where our team became champion. This time it’s Stanford. Alhamdulillah.

Session on Building Global-Scale Cloud-Native SaaS Applications

It was an interesting session with tech entrepreneurs and senior technology professionals at the BASIS Auditorium. BASIS (https://basis.org.bd/) is the software association of Bangladesh.

Zaman Bhai of AWS and I shared different aspects of building cloud-native SaaS solutions from both global and local perspectives. The discussion points on current trends were helpful for everyone. We also discussed different challenges and potential solutions.

DevOps for a Bangladeshi Fintech Organization

Fintech is a highly regulated segment in every country. Bangladeshi fintech is no exception, and regulation becomes a major constraint on leveraging the latest technology. One of the top two banks in Bangladesh was very keen to leverage cloud services to manage its internally developed projects. In that direction, it wanted CI/CD capability for all of its internal projects. Since all of its resources are on a private network, a number of challenges came up that do not apply to a standard environment. A few of them are listed below:

- No internet access to the environment, and internet access would not be allowed under any circumstances in this CI/CD process

- We had no access to the client’s servers.

- Outdated on-premises GitLab repository servers

- Their existing deployment process was completely manual

To address the above challenges, we had to align all activities with their compliance requirements and restrictions.

The step-by-step process is described below:

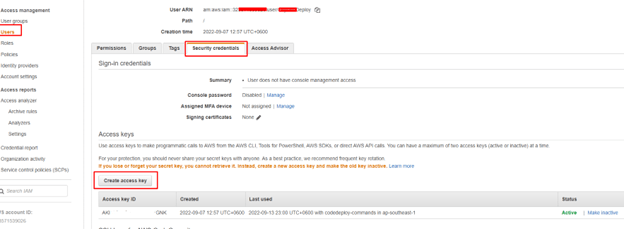

Step 1: Create an IAM user for the on-premises instance.

Create an IAM user that the on-premises instance will use to authenticate and interact with CodeDeploy. There is no need to attach any permissions at this stage.

Step 2: Assign permissions to the IAM user

Assign the following AWS managed policies to the newly created user:

- AWSCodeDeployFullAccess

- AmazonS3ReadOnlyAccess

Step 3: Create an access key and download it
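Steps 1 to 3 can also be scripted. The following is a minimal sketch, assuming boto3’s IAM client and its `create_user`, `attach_user_policy`, and `create_access_key` calls. The client is passed in so the logic can be exercised without AWS, and the user name `CodeDeployUsername` is only an example borrowed from Step 8. Treat the returned secret like the downloaded key file, since AWS shows it only at creation time.

```python
"""Sketch of Steps 1-3: create the on-premises IAM user, attach policies, create a key."""

# The two AWS managed policies listed in Step 2.
MANAGED_POLICY_ARNS = [
    "arn:aws:iam::aws:policy/AWSCodeDeployFullAccess",
    "arn:aws:iam::aws:policy/AmazonS3ReadOnlyAccess",
]


def provision_onprem_user(iam, user_name):
    """Create the user, attach the policies, and return (user_arn, key_id, secret).

    `iam` is expected to behave like boto3.client("iam"); injecting it keeps
    this function testable without real AWS credentials.
    """
    user = iam.create_user(UserName=user_name)["User"]
    for policy_arn in MANAGED_POLICY_ARNS:
        iam.attach_user_policy(UserName=user_name, PolicyArn=policy_arn)
    key = iam.create_access_key(UserName=user_name)["AccessKey"]
    return user["Arn"], key["AccessKeyId"], key["SecretAccessKey"]


# Usage (requires boto3 and admin credentials):
#   import boto3
#   arn, key_id, secret = provision_onprem_user(boto3.client("iam"), "CodeDeployUsername")
```

The returned ARN, key ID, and secret are exactly the values Step 4 needs in the on-premises configuration file.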

Step 4: Add a configuration file to the on-premises instance

Add a configuration file to the on-premises instance using root or administrator permissions. This file declares the IAM user credentials and the target AWS Region to be used for CodeDeploy. It must be placed in a specific location on the on-premises instance, must include the IAM user’s ARN, access key ID, secret access key, and the target AWS Region, and must follow a specific format.

1. Create a file named codedeploy.onpremises.yml in the following location on the on-premises instance: /etc/codedeploy-agent/conf

2. Use a text editor to add the following information to the newly created codedeploy.onpremises.yml file:

---

aws_access_key_id: secret-key-id

aws_secret_access_key: secret-access-key

iam_user_arn: iam-user-arn

region: supported-region

3. In the same directory (/etc/codedeploy-agent/conf), edit the codedeployagent.yml file and set the proxy URL as below:

:proxy_uri: https://username:password@proxy-domain-name:proxy-port
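Both snippets from Step 4 are small enough to generate from a script, which avoids hand-typing secrets on the server. A minimal sketch, assuming Python 3 on the admin machine: the function names are hypothetical, the ARN check is a loose sanity check rather than AWS’s full validation, and the proxy credentials are percent-encoded so characters such as `@` or `:` in a password do not break the URI.

```python
"""Sketch: render the two CodeDeploy agent config snippets described in Step 4."""
import re
from urllib.parse import quote

# Loose check for an IAM user ARN (12-digit account ID, optional path in the name).
ARN_PATTERN = re.compile(r"^arn:aws:iam::\d{12}:user/[\w+=,.@/-]+$")


def render_onpremises_yml(key_id, secret, iam_user_arn, region):
    """Return the contents of /etc/codedeploy-agent/conf/codedeploy.onpremises.yml."""
    if not ARN_PATTERN.match(iam_user_arn):
        raise ValueError(f"not an IAM user ARN: {iam_user_arn}")
    return (
        "---\n"
        f"aws_access_key_id: {key_id}\n"
        f"aws_secret_access_key: {secret}\n"
        f"iam_user_arn: {iam_user_arn}\n"
        f"region: {region}\n"
    )


def render_proxy_uri_line(user, password, host, port, scheme="https"):
    """Return the :proxy_uri: line for codedeployagent.yml with encoded credentials."""
    creds = f"{quote(user, safe='')}:{quote(password, safe='')}"
    return f":proxy_uri: {scheme}://{creds}@{host}:{port}"
```

The output still has to be written to /etc/codedeploy-agent/conf with root permissions, as Step 4 describes; the `https` default simply mirrors the example line above.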

Step 5: Install and configure the AWS CLI

- Install the AWS CLI by following the official AWS CLI installation guide.

- Configure the AWS CLI as below:

aws configure

AWS Access Key ID [None]: your access key ID

AWS Secret Access Key [None]: your secret key

Default region name [None]: supported-region

Default output format [None]: json

Step 6: Set the AWS_REGION environment variable

export AWS_REGION=supported-region

Step 7: Install the CodeDeploy agent

Install the CodeDeploy agent on the on-premises instance:

For Ubuntu:

sudo apt-get update

sudo apt-get install ruby2.0 (Ubuntu Server 14.04) or sudo apt install ruby-full (16.04 or later).

sudo apt-get install wget

cd /home/{username}

wget https://aws-codedeploy-{region}.s3.amazonaws.com/latest/install

chmod +x ./install

sudo ./install auto (Ubuntu Server 14.04, 16.04, and 18.04)

or sudo ./install auto > /tmp/logfile (for Ubuntu 20.04).

For Amazon Linux or Red Hat:

sudo yum update

sudo yum install ruby

sudo yum install wget

wget https://aws-codedeploy-{region}.s3.amazonaws.com/latest/install

chmod +x ./install

sudo ./install auto

Step 8: Register the on-premises instance with CodeDeploy from your local machine.

You need to install and configure the AWS CLI on your local machine, and your user must have the permissions required to register and tag the on-premises instance.

Find the ARN of the user you created in Step 1 and register the instance using the following command:

aws deploy register-on-premises-instance --instance-name AssetTag12010298EX --iam-user-arn arn:aws:iam::444455556666:user/CodeDeployUsername

Step 9: Tag the on-premises instance

You can use either the AWS CLI or the CodeDeploy console to tag the on-premises instance. (CodeDeploy uses on-premises instance tags to identify the deployment targets during a deployment.)

To tag the instance from the CLI, use the following command:

aws deploy add-tags-to-on-premises-instances --instance-names AssetTag12010298EX --tags Key=Name,Value=CodeDeployDemo-OnPrem
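Steps 8 and 9 map onto two CodeDeploy API calls. A sketch, assuming boto3’s CodeDeploy client and its `register_on_premises_instance` and `add_tags_to_on_premises_instances` methods, with the instance name, ARN, and tag copied from the commands above. The client is injected so the sketch runs without credentials, and `register_and_tag` is a hypothetical helper name.

```python
"""Sketch of Steps 8-9: register an on-premises instance, then tag it."""


def register_and_tag(codedeploy, instance_name, iam_user_arn, tags):
    """Register the instance and apply tags so deployment groups can target it.

    `codedeploy` is expected to behave like boto3.client("codedeploy");
    `tags` is a plain dict such as {"Name": "CodeDeployDemo-OnPrem"}.
    Returns the tag list in the Key/Value shape the API expects.
    """
    codedeploy.register_on_premises_instance(
        instanceName=instance_name, iamUserArn=iam_user_arn
    )
    tag_list = [{"Key": key, "Value": value} for key, value in tags.items()]
    codedeploy.add_tags_to_on_premises_instances(
        tags=tag_list, instanceNames=[instance_name]
    )
    return tag_list


# Usage (requires boto3 and credentials with register/tag permissions):
#   import boto3
#   register_and_tag(boto3.client("codedeploy"), "AssetTag12010298EX",
#                    "arn:aws:iam::444455556666:user/CodeDeployUsername",
#                    {"Name": "CodeDeployDemo-OnPrem"})
```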

Step 10: Track deployments to the on-premises instance

After you deploy an application revision to registered and tagged on-premises instances, you can track the deployment’s progress.
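Tracking a deployment from a script usually means polling its status. A minimal sketch, assuming boto3’s CodeDeploy `get_deployment` call and treating `Succeeded`, `Failed`, and `Stopped` as final states. The poll interval and limit are arbitrary choices, and the sleep function is injectable for testing. boto3 also provides a built-in `deployment_successful` waiter, which may be simpler in practice.

```python
"""Sketch: poll a CodeDeploy deployment until it reaches a final status."""
import time

TERMINAL_STATUSES = {"Succeeded", "Failed", "Stopped"}


def wait_for_deployment(codedeploy, deployment_id, poll_seconds=15,
                        max_polls=120, sleep=time.sleep):
    """Return the final status, or raise TimeoutError after max_polls checks.

    `codedeploy` is expected to behave like boto3.client("codedeploy").
    """
    for _ in range(max_polls):
        info = codedeploy.get_deployment(deploymentId=deployment_id)["deploymentInfo"]
        status = info["status"]
        if status in TERMINAL_STATUSES:
            return status
        sleep(poll_seconds)  # still Created/Queued/InProgress, so wait and re-check
    raise TimeoutError(f"deployment {deployment_id} not finished after {max_polls} polls")
```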

Networking

The client needs to ensure the following:

- The client’s internal GitLab server can access AWS over the site-to-site VPN.

- The target deployment server (where the CodeDeploy agent will be installed) can access AWS over the site-to-site VPN.

- The target deployment server can access the proxy server hosted on AWS (IP: 10.35.5.30, port: 3128) over the site-to-site VPN.

- The target deployment server has internet access to install the CodeDeploy agent and the AWS CLI.

VPC configuration:

- Configure a VPC named ‘VPC The CBL’ using the IP address block provided by the client’s on-premises team: 10.35.5.0/24.

- Create four subnets across two Availability Zones with the following IP address ranges:

  - VPC The CBL – Private – AZ1 10.35.5.0/28

  - VPC The CBL – Public – AZ1 10.35.5.16/28

  - VPC The CBL – Private – AZ2 10.35.5.32/28

  - VPC The CBL – Public – AZ2 10.35.5.48/28

- Rename the default route table as the private route table and associate it with both private subnets:

  - VPC The CBL – Private – AZ1 10.35.5.0/28

  - VPC The CBL – Private – AZ2 10.35.5.32/28

- Create a public route table and associate it with both public subnets:

  - VPC The CBL – Public – AZ1 10.35.5.16/28

  - VPC The CBL – Public – AZ2 10.35.5.48/28

- Create an Internet gateway (IGW) and attach it to the VPC.

- In the public route table, add a route for destination 0.0.0.0/0 with the IGW as the target.
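The subnet plan above can be sanity-checked before anything is created in the console. A short sketch using Python’s standard `ipaddress` module: it confirms every /28 sits inside 10.35.5.0/24, checks that no two subnets overlap, and reports usable addresses after the five that AWS reserves in each subnet. The subnet names are written with plain hyphens here.

```python
"""Sketch: validate the VPC The CBL subnet plan with the standard library."""
import ipaddress

VPC_CIDR = ipaddress.ip_network("10.35.5.0/24")
SUBNETS = {
    "VPC The CBL - Private - AZ1": "10.35.5.0/28",
    "VPC The CBL - Public - AZ1": "10.35.5.16/28",
    "VPC The CBL - Private - AZ2": "10.35.5.32/28",
    "VPC The CBL - Public - AZ2": "10.35.5.48/28",
}


def check_subnet_plan(vpc, subnets):
    """Raise ValueError on a bad plan; otherwise return usable hosts per subnet."""
    nets = {name: ipaddress.ip_network(cidr) for name, cidr in subnets.items()}
    for name, net in nets.items():
        if not net.subnet_of(vpc):
            raise ValueError(f"{name} ({net}) is outside {vpc}")
    names = list(nets)
    for i, first in enumerate(names):
        for second in names[i + 1:]:
            if nets[first].overlaps(nets[second]):
                raise ValueError(f"{first} overlaps {second}")
    # AWS reserves 5 addresses per subnet (the first four and the last one).
    return {name: net.num_addresses - 5 for name, net in nets.items()}
```

With this plan each /28 leaves 11 usable addresses, and the four subnets occupy 10.35.5.0 to 10.35.5.63, leaving 10.35.5.64/26 and 10.35.5.128/25 free for later growth.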

Site-to-Site VPN configuration from AWS to the client’s on-premises network:

- Create a VPN checklist file and collect inputs from both BS23 and the client’s on-premises team.

- Create a customer gateway named The CBL CGW with IP address 103.29.105.4.

- Create a virtual private gateway named The CBL VGW and attach it to VPC The CBL.

- Configure a Site-to-Site VPN named AWS-To-The CBL using the information below:

  - Target gateway type → Virtual private gateway → The CBL VGW

  - Customer gateway → Existing → The CBL CGW

  - Routing options → Static, and add static IP prefix → 192.168.98.16/28

  - Local IPv4 network CIDR → 192.168.98.16/28

  - Remote IPv4 network CIDR → 10.35.5.0/24

- Download the configuration file for Cisco Firepower (IKEv1) and share it with the client’s team.

- Enable route propagation on both the private and public route tables.
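A static-route Site-to-Site VPN only works cleanly if the two networks do not overlap. A minimal sketch that encodes the Local and Remote IPv4 network CIDRs from the settings above, asserts they are disjoint, and classifies an address by tunnel side; `route_side` is a hypothetical helper, not part of any AWS tool.

```python
"""Sketch: check the VPN CIDRs and tell which side of the tunnel an address is on."""
import ipaddress

# Values from the Site-to-Site VPN settings above.
LOCAL_ONPREM_CIDR = ipaddress.ip_network("192.168.98.16/28")  # customer side
REMOTE_AWS_CIDR = ipaddress.ip_network("10.35.5.0/24")        # VPC The CBL

# Overlapping ranges would make routing across the tunnel ambiguous.
assert not LOCAL_ONPREM_CIDR.overlaps(REMOTE_AWS_CIDR), "VPN CIDRs must not overlap"


def route_side(ip):
    """Return "on-premises", "aws", or None if the address is in neither range."""
    addr = ipaddress.ip_address(ip)
    if addr in LOCAL_ONPREM_CIDR:
        return "on-premises"
    if addr in REMOTE_AWS_CIDR:
        return "aws"
    return None
```

For example, the proxy address 10.35.5.30 from the Networking list falls on the AWS side (inside the Public AZ1 subnet, 10.35.5.16/28), while 192.168.98.20 is on-premises.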

Configure a VPC endpoint for accessing AWS CodeCommit:

- Create the endpoint with the following settings:

  - Name tag: AWS CodeCommit

  - Service category: AWS services

  - Filter services: com.amazonaws.ap-southeast-1.git-codecommit

  - Select VPC: VPC The CBL

  - Select private subnets:

    - ap-southeast-1a (apse1-az1)

    - ap-southeast-1b (apse1-az2)

  - IP address type: IPv4

  - Security groups: AWS CodeCommit, configured to allow all inbound and outbound traffic.

  - Under Additional settings:

    - Select: Enable DNS name

    - DNS record IP type: IPv4

  - Policy: Full access.

VPC endpoint for AWS CodeCommit: vpce-07bec13f9640d9eee-yrnxfw8a.git-codecommit.ap-southeast-1.vpce.amazonaws.com
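The console settings above correspond roughly to the parameters of the EC2 `CreateVpcEndpoint` API. A sketch, assuming boto3’s `create_vpc_endpoint`; the VPC, subnet, and security-group IDs are placeholders, and the IP address type and DNS record type are left at their defaults rather than set explicitly. Omitting `PolicyDocument` leaves the default full-access policy in place.

```python
"""Sketch: build the request for the CodeCommit interface endpoint."""


def build_codecommit_endpoint_request(vpc_id, subnet_ids, security_group_id,
                                      region="ap-southeast-1"):
    """Return keyword arguments for ec2.create_vpc_endpoint (IDs are placeholders)."""
    return {
        "VpcEndpointType": "Interface",
        "VpcId": vpc_id,
        "ServiceName": f"com.amazonaws.{region}.git-codecommit",
        "SubnetIds": list(subnet_ids),           # the two private subnets
        "SecurityGroupIds": [security_group_id],
        "PrivateDnsEnabled": True,               # "Enable DNS name"
        "TagSpecifications": [{
            "ResourceType": "vpc-endpoint",
            "Tags": [{"Key": "Name", "Value": "AWS CodeCommit"}],
        }],
    }


# Usage (requires boto3 and credentials):
#   import boto3
#   req = build_codecommit_endpoint_request("vpc-0123example",
#                                           ["subnet-aaa", "subnet-bbb"], "sg-0123example")
#   boto3.client("ec2", region_name="ap-southeast-1").create_vpc_endpoint(**req)
```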

Launch an EC2 instance for the proxy server:

Install Squid and configure the proxy server:

- Install Squid.

root@prox:~# apt -y install squid

- Apply the common forward proxy settings.

root@prox:~# vi /etc/squid/squid.conf

acl CONNECT method CONNECT

# line 1209: add (define ACL for internal network)

acl my_localnet src 192.168.98.16/28

# line 1397: uncomment

http_access deny to_localhost

# line 1408: comment out and add the line (apply ACL for internal network)

#http_access allow localhost

http_access allow my_localnet

# line 5611: add

request_header_access Referer deny all

request_header_access X-Forwarded-For deny all

request_header_access Via deny all

request_header_access Cache-Control deny all

# line 8264: add

# forwarded_for on

forwarded_for off

- Restart Squid.

root@prox:~# systemctl restart squid
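Squid evaluates `http_access` lines from top to bottom and applies the first rule that matches. The sketch below is a deliberately simplified model of the rules edited above, assuming only these three matter: the stock file also has port and CONNECT checks, and ends with `http_access deny all`. It is an illustration of first-match evaluation, not a Squid config parser.

```python
"""Simplified first-match model of the http_access rules configured above."""
import ipaddress

MY_LOCALNET = ipaddress.ip_network("192.168.98.16/28")  # acl my_localnet src ...


def squid_decision(src_ip, dst_ip):
    """Return "allow" or "deny" for a request from src_ip to dst_ip."""
    src = ipaddress.ip_address(src_ip)
    dst = ipaddress.ip_address(dst_ip)
    rules = [
        # http_access deny to_localhost (destination is the proxy host itself)
        ("deny", dst.is_loopback or dst.is_unspecified),
        # http_access allow my_localnet
        ("allow", src in MY_LOCALNET),
        # http_access deny all (last rule in the stock squid.conf)
        ("deny", True),
    ]
    for action, matched in rules:
        if matched:
            return action
```

So the on-premises CodeDeploy agent (a 192.168.98.16/28 address) is allowed through, while any other source falls through to the final deny.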

Set up Basic Authentication so that Squid requires users to authenticate.

- Install a package that includes htpasswd.

root@prox:~# apt -y install apache2-utils

- Configure Squid to use Basic Authentication.

root@prox:~# vi /etc/squid/squid.conf

acl CONNECT method CONNECT

# line 1209: add the following for Basic auth

auth_param basic program /usr/lib/squid/basic_ncsa_auth /etc/squid/.htpasswd

auth_param basic children 5

auth_param basic realm Squid Basic Authentication

auth_param basic credentialsttl 5 hours

acl password proxy_auth REQUIRED

http_access allow password

- Restart Squid.

root@prox:~# systemctl restart squid

- Add a user (the -c option creates a new password file):

root@prox:~# htpasswd -c /etc/squid/.htpasswd bs23

New password: # set password

Re-type new password:

Adding password for user bs23
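With `basic_ncsa_auth`, a client answers the proxy’s authentication challenge with `Proxy-Authorization: Basic <base64 of user:password>`, and Squid checks the decoded pair against /etc/squid/.htpasswd. A sketch of both sides of that encoding, plus a standard-library `urllib.request.ProxyHandler` pointing at the proxy. It assumes the proxy is reached over plain HTTP on port 3128 (Squid’s default listener), and `bs23`/`secret` are example credentials only.

```python
"""Sketch: Basic proxy credentials as sent to Squid, and a urllib proxy handler."""
import base64
import urllib.request
from urllib.parse import quote


def proxy_authorization_value(user, password):
    """Value a client sends in the Proxy-Authorization header."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return f"Basic {token}"


def decode_basic_credentials(header_value):
    """Inverse step: the (user, password) pair the proxy checks against .htpasswd."""
    scheme, _, token = header_value.partition(" ")
    if scheme != "Basic":
        raise ValueError("not a Basic credential")
    user, _, password = base64.b64decode(token).decode("utf-8").partition(":")
    return user, password


def proxy_handler(host, port, user, password):
    """ProxyHandler that routes HTTP and HTTPS through the authenticated proxy."""
    url = f"http://{quote(user, safe='')}:{quote(password, safe='')}@{host}:{port}"
    return urllib.request.ProxyHandler({"http": url, "https": url})


# Usage: opener = urllib.request.build_opener(proxy_handler("10.35.5.30", 3128, "bs23", "secret"))
```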

Proxy server information:

IP: 10.35.X.X

Port: 3128

User: username

Password: password

The above process helped the client achieve better efficiency and a predictable flow in their DevOps process.

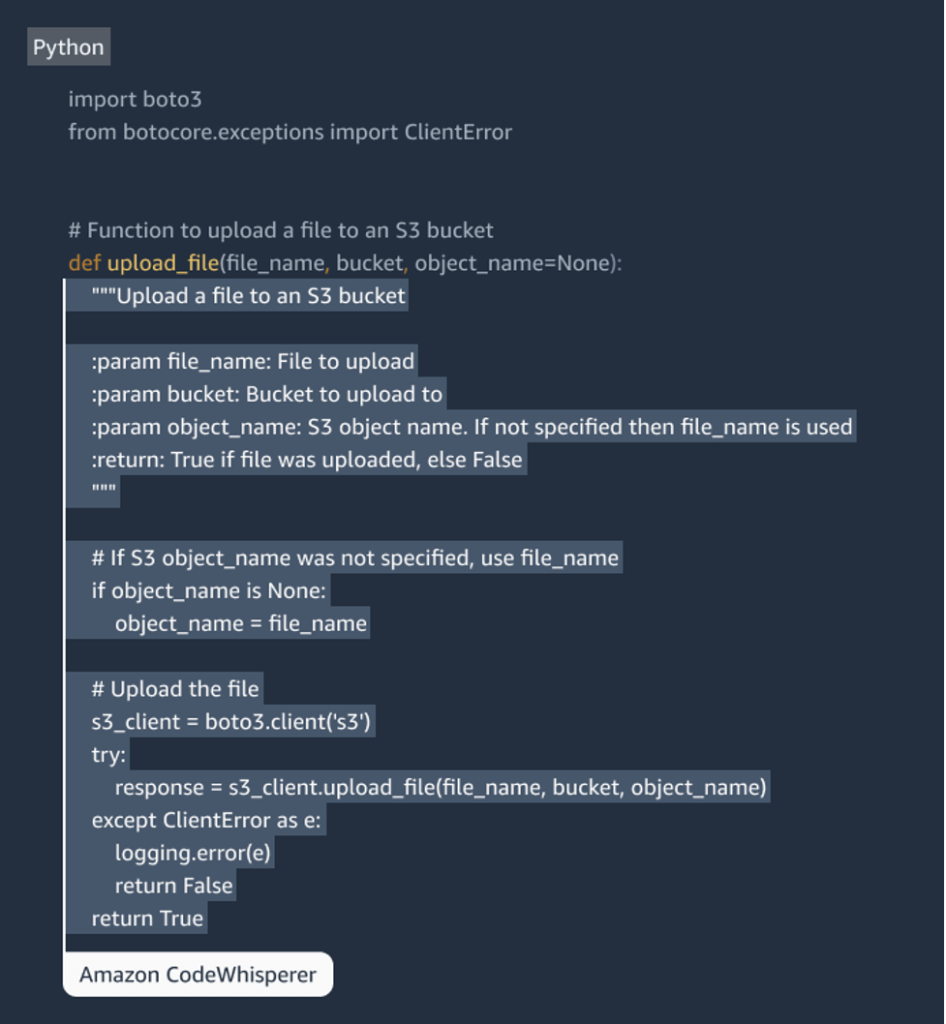

AI Coding Assistant: Amazon CodeWhisperer

There has been major disruption in the technology and software engineering segment over the last several years. Tech giants are trying to come up with different solutions to make life easier. We now see many low-code/no-code platforms like Amazon Honeycode, Google AppSheet, Assembla, Appfarm, etc., which can be used to build business solutions with minimal or no coding expertise. We might see a dynamic shift in the coming days.

We all know about GitHub Copilot, which works as an AI coding assistant. Many developers have already adopted it in their development process. Now AWS has come up with Amazon CodeWhisperer to provide a similar or better service.

For instance, if you are writing Python and add the comment “Function to upload a file to an S3 bucket”, CodeWhisperer will suggest the required code.
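For illustration, a hand-written function matching that comment could look like the sketch below; it is not CodeWhisperer’s actual output. It assumes boto3’s S3 client and its `upload_file(Filename, Bucket, Key)` method, and the client is passed in so the function can be tested without AWS. `upload_file_to_s3` is a hypothetical name.

```python
"""Hand-written illustration of a function that uploads a file to an S3 bucket."""
import os


def upload_file_to_s3(s3_client, file_path, bucket, key=None):
    """Upload a local file and return the object key that was used.

    `s3_client` is expected to behave like boto3.client("s3"). When no key
    is given, the file's base name is used as the object key.
    """
    if key is None:
        key = os.path.basename(file_path)
    s3_client.upload_file(Filename=file_path, Bucket=bucket, Key=key)
    return key
```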

According to Amazon, CodeWhisperer not only assists with coding but also considers the following:

- It takes your coding style into consideration:

CodeWhisperer automatically analyses the comment, determines which cloud services and public libraries are best suited for the specified task, and recommends a code snippet directly in the source code editor. The recommendations are synthesized based on your coding style and variable names and are not simply snippets.

- Security is considered:

Amazon claims that CodeWhisperer also treats security as a priority. It provides security scans for Java and Python to help developers detect vulnerabilities in their projects and build applications responsibly. It also includes a reference tracker that detects whether a code recommendation might be similar to particular training data. Developers can then easily find and review the code example and decide whether to use the code in their project.

More details can be found at https://aws.amazon.com/codewhisperer/

To preview Amazon CodeWhisperer, sign up at https://pages.awscloud.com/codewhisperer-sign-up-form.html

In the coming days, we might see minimal or no entry barrier to becoming a development engineer in many cases.

AWS Outposts: AWS Services On-Premises

We see the possibility of AWS Outposts coming to Bangladesh. This could be a great advantage for highly regulated industries, where there are restrictions on keeping data outside the country and very low latency is needed. It would also encourage more companies to adopt hybrid cloud. With that in mind, a short presentation deck is shared below:

Sprint-Based Decision Making

As a team, we constantly face different challenges. Without precise and directional discussion, we often fail to find proper solutions. Sprint-based decision making can help here: all team members take part in finding an optimized solution, which reduces unstructured discussion.

The steps are:

- Start with Problems: 7 min

- Present Problems: 4 min per person

- Select Problems to Solve: 6 min

- Reframe Problems as Standardised Challenges: 6 min

- Produce Solutions: 7 min

- Vote on Solutions: 10 min

- Prioritise Solutions: 30 sec

- Decide what to execute on: 10 min

- Turn Solutions into Actionable Tasks: 5 min
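Adding up the timeboxes gives a quick estimate of how long a session will take. A small sketch, treating 30 seconds as 0.5 minutes and scaling the 4-minute presentation step by team size; the names are illustrative.

```python
"""Sketch: estimate total session length from the timeboxes listed above."""

# Minutes per step; "Present Problems" is handled separately because it scales.
FIXED_STEP_MINUTES = {
    "Start with Problems": 7,
    "Select Problems to Solve": 6,
    "Reframe Problems as Standardised Challenges": 6,
    "Produce Solutions": 7,
    "Vote on Solutions": 10,
    "Prioritise Solutions": 0.5,
    "Decide what to execute on": 10,
    "Turn Solutions into Actionable Tasks": 5,
}
PRESENT_MINUTES_PER_PERSON = 4


def session_minutes(team_size):
    """Total minutes for a team of the given size."""
    return sum(FIXED_STEP_MINUTES.values()) + PRESENT_MINUTES_PER_PERSON * team_size
```

For a team of five, that is 51.5 + 20 = 71.5 minutes.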

Jonathan has shared it beautifully in the following link. I am confident that it will be really helpful for many of us.

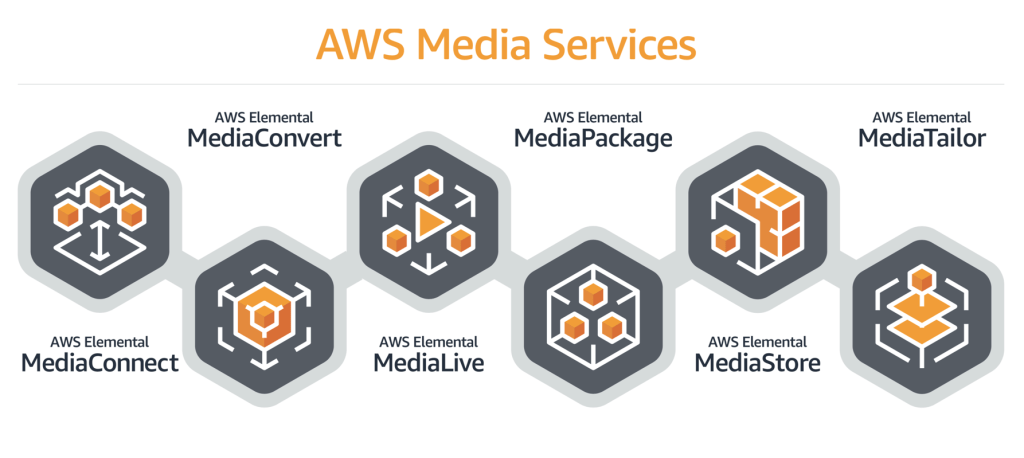

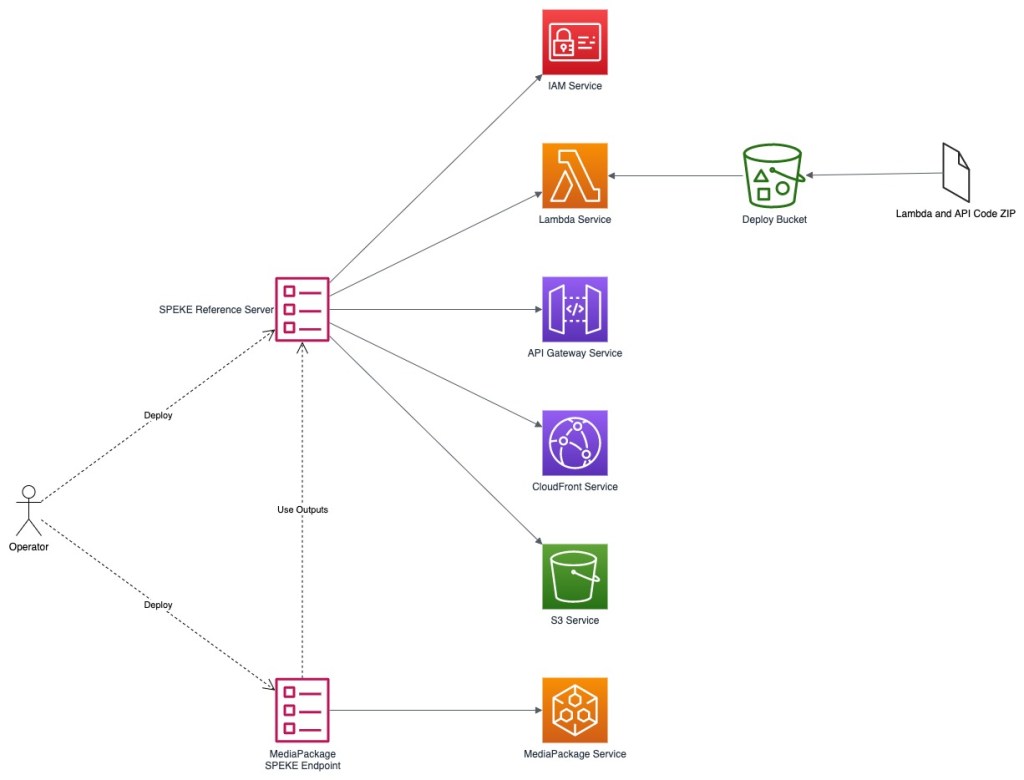

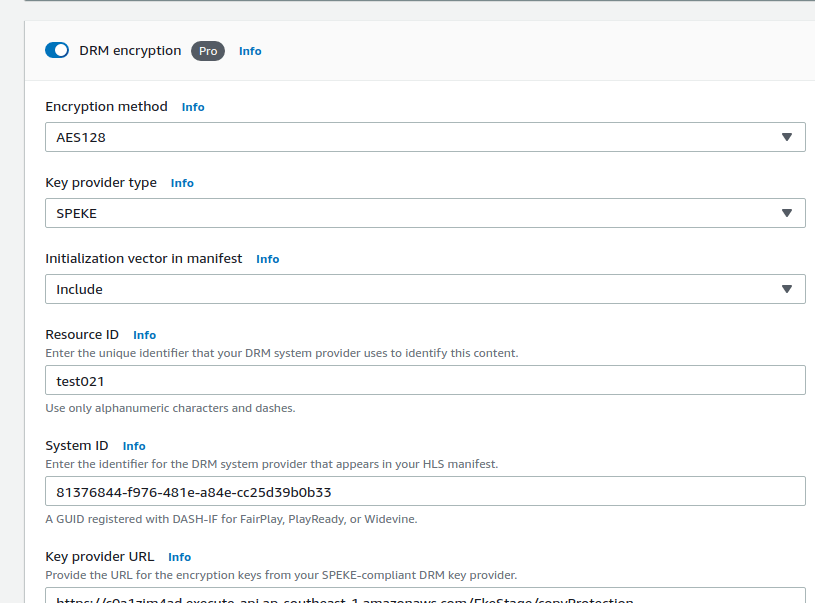

OTT Platform with Speke reference server through AWS Elemental

Business Context and Problem Statement

Voice-call revenue is declining for telco companies. People use freely available VoIP solutions like WhatsApp and Viber for both voice and text communication. To build new revenue streams, companies are focusing on digital services. With that in mind, one of the top telco service providers in Bangladesh initiated an OTT platform for its subscribers. All the streams on the platform were unencrypted, which was a major concern for them. They wanted secure, protected live streams for their end users, encrypted with a key rotated every 5 minutes, at an optimized cost. As one of the top AWS solution providers, we were approached to see how we could help them achieve this goal.
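Rotating the key every 5 minutes means each rotation period needs its own key, which drives key-storage and key-request volume. A minimal sketch of that arithmetic, assuming fixed, back-to-back periods measured from the start of the stream; the helper names are hypothetical, and this is not how AWS Elemental schedules rotation internally.

```python
"""Sketch: key-period arithmetic for a 5-minute rotation interval."""
import math

ROTATION_SECONDS = 5 * 60  # the client's requirement: rotate every 5 minutes


def key_period_index(elapsed_seconds, rotation_seconds=ROTATION_SECONDS):
    """Index of the key period covering a point on the stream timeline."""
    if elapsed_seconds < 0:
        raise ValueError("elapsed_seconds must be non-negative")
    return int(elapsed_seconds // rotation_seconds)


def keys_needed(duration_seconds, rotation_seconds=ROTATION_SECONDS):
    """Number of distinct keys a stream of this length uses (at least one)."""
    return max(1, math.ceil(duration_seconds / rotation_seconds))
```

A 24/7 channel therefore uses 86400 / 300 = 288 keys per day.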

To provide the required solution, we looked at available options like BuyDRM (https://buydrm.com/) and Intertrust (https://www.expressplay.com/), which are heavily used by industry leaders, but their subscription fees were too expensive for our client. Considering all aspects, we started looking for free, open-source solutions that could be customized, and the Speke reference server emerged as the one to use with AWS Elemental services for this purpose.

The open-source repo of the Speke reference server can be found at the following link:

https://github.com/awslabs/speke-reference-server

The Speke reference server solution is written in Python. After digging further, we found that its AWS CloudFormation template was based on Python 3.6, which is not supported by the latest Lambda Python runtime (3.9), and a number of the libraries it used were deprecated in their latest versions. We started refactoring the code to comply with Python 3.9; the result can be found in the following link.

To support Python 3.9, the codebase was also refactored, as shared below:

After deploying the CloudFormation stack, we need to upload the above codebase for the Speke reference server to function properly.

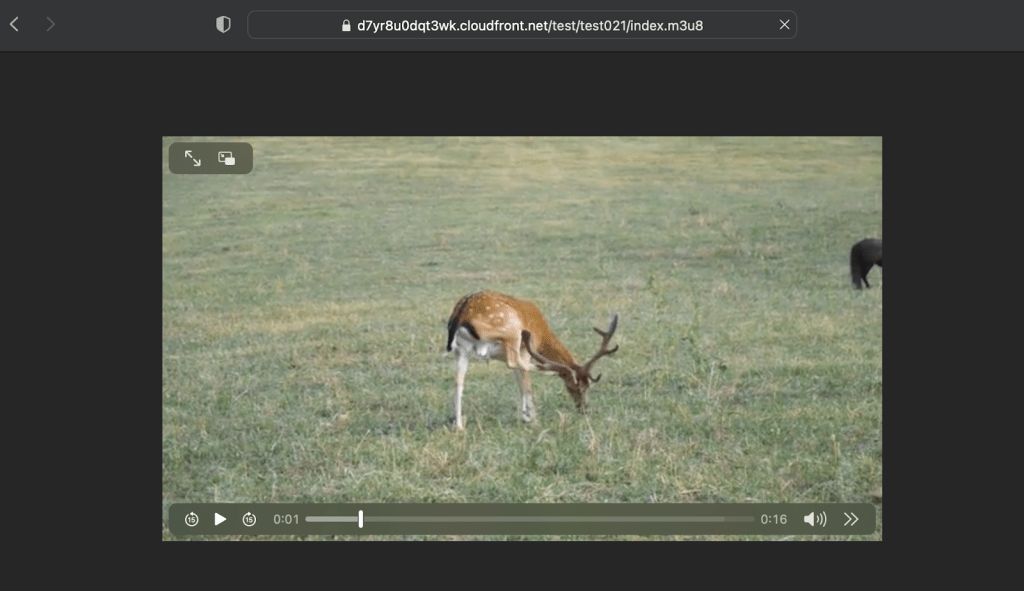

After successful implementation, the flow is as follows:

Input: <s3-bucket>/<source_folder>/<source_file_name>

DRM:

Output: <s3-bucket>/<output_folder>/<output_file.index.m3u8++>

That S3 bucket is served through a CloudFront distribution, in this case:

Demo Link: https://yourcloudfrontURL/test/test021/index.m3u8

We ran some tests playing the content in VLC, QuickTime, and Safari.

If we read the m3u8 file:

$ curl https://yourcloudfrontURL/test/test021/index.m3u8

#EXTM3U

#EXT-X-VERSION:3

#EXT-X-INDEPENDENT-SEGMENTS

#EXT-X-STREAM-INF:BANDWIDTH=1610383,AVERAGE-BANDWIDTH=1610383,CODECS="avc1.77.30,mp4a.40.2",RESOLUTION=640x360,FRAME-RATE=23.976

indextest021.m3u8

$ curl https://d7yr8u0dqt3wk.cloudfront.net/test/test021/indextest021.m3u8

#EXTM3U

#EXT-X-VERSION:3

#EXT-X-TARGETDURATION:11

#EXT-X-MEDIA-SEQUENCE:1

#EXT-X-PLAYLIST-TYPE:VOD

#EXT-X-KEY:METHOD=AES-128,URI="https://d3f9ggqez0f75l.cloudfront.net/test021/78746cf8-58aa-4dc7-810f-10ed3daecf4e",IV=0x00000000000000000000000000000001

#EXTINF:10,

indextest021_00001.ts

#EXT-X-KEY:METHOD=AES-128,URI="https://d3f9ggqez0f75l.cloudfront.net/test021/78746cf8-58aa-4dc7-810f-10ed3daecf4e",IV=0x00000000000000000000000000000002

#EXTINF:6,

indextest021_00002.ts

#EXT-X-ENDLIST
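Each segment is decrypted with the most recent `#EXT-X-KEY` tag above it, and the player fetches the key from that tag’s URI. A minimal standard-library sketch that pairs segments with their key attributes; the attribute regex handles the quoted and unquoted values seen in this playlist but is not a complete RFC 8216 parser, and the function names are hypothetical.

```python
"""Sketch: pair each media segment in an HLS playlist with its EXT-X-KEY attributes."""
import re

# NAME=VALUE pairs where VALUE is either a quoted string or runs to the next comma.
ATTR_RE = re.compile(r'([A-Z0-9-]+)=("[^"]*"|[^,]*)')


def parse_attributes(attr_text):
    """Turn 'METHOD=AES-128,URI="...",IV=0x..' into a dict with quotes removed."""
    return {name: value.strip('"') for name, value in ATTR_RE.findall(attr_text)}


def segments_with_keys(playlist_text):
    """Return [(segment_uri, key_attributes_or_None), ...] in playlist order."""
    current_key = None
    result = []
    for raw in playlist_text.splitlines():
        line = raw.strip()
        if not line:
            continue
        if line.startswith("#EXT-X-KEY:"):
            current_key = parse_attributes(line[len("#EXT-X-KEY:"):])
        elif not line.startswith("#"):
            result.append((line, current_key))  # non-tag lines are segment URIs
    return result
```

Here both segments share one key URI but carry different IVs (1 and 2), matching their media sequence numbers. RFC 8216 specifies that when `IV` is absent, the media sequence number is used as the IV.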

Here, we have a Key URI: https://yourcloudfrontURL/test021/78746cf8-58aa-4dc7-810f-10ed3daecf4e

This key URI points to an S3 bucket origin. Every time we convert content through MediaConvert and provide a ResourceID, a key with that resource ID is created in the bucket.
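The behaviour described above can be modelled with a toy in-memory store: one key per resource ID, served from a URI of the form `<base>/<resource_id>/<key_id>`, as in the playlist. This is a hypothetical illustration, not the Speke reference server’s code. Real keys would live in S3 behind CloudFront, and `InMemoryKeyStore` and its methods are invented names.

```python
"""Toy model of per-resource AES-128 key storage addressed by URI."""
import os
import uuid


class InMemoryKeyStore:
    """Stand-in for the key bucket: maps (resource_id, key_id) to 16 random bytes."""

    def __init__(self, base_url):
        self.base_url = base_url.rstrip("/")
        self._keys = {}

    def create_key(self, resource_id):
        """Create a 128-bit key for AES-128 and return the URI a playlist would reference."""
        key_id = str(uuid.uuid4())
        self._keys[(resource_id, key_id)] = os.urandom(16)
        return f"{self.base_url}/{resource_id}/{key_id}"

    def fetch(self, key_uri):
        """Return the raw key bytes for a URI previously returned by create_key."""
        resource_id, key_id = key_uri[len(self.base_url) + 1:].split("/", 1)
        return self._keys[(resource_id, key_id)]
```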

The above POC met the client’s needs. Their existing solution is now being refactored to leverage AWS services, the Speke reference server, and other relevant technology stacks.

Cloudemy

I have been thinking about an online platform that can help cloud enthusiasts kick off their cloud journey. To serve that, the cloudemy.xyz domain has been registered. All courses will be in the local language (Bangla). Development of the platform is in progress, with an expected release date of 1st August 2022. A placeholder page is available until the platform is released. I expect it to make a significant impact on cloud adoption here in Bangladesh.

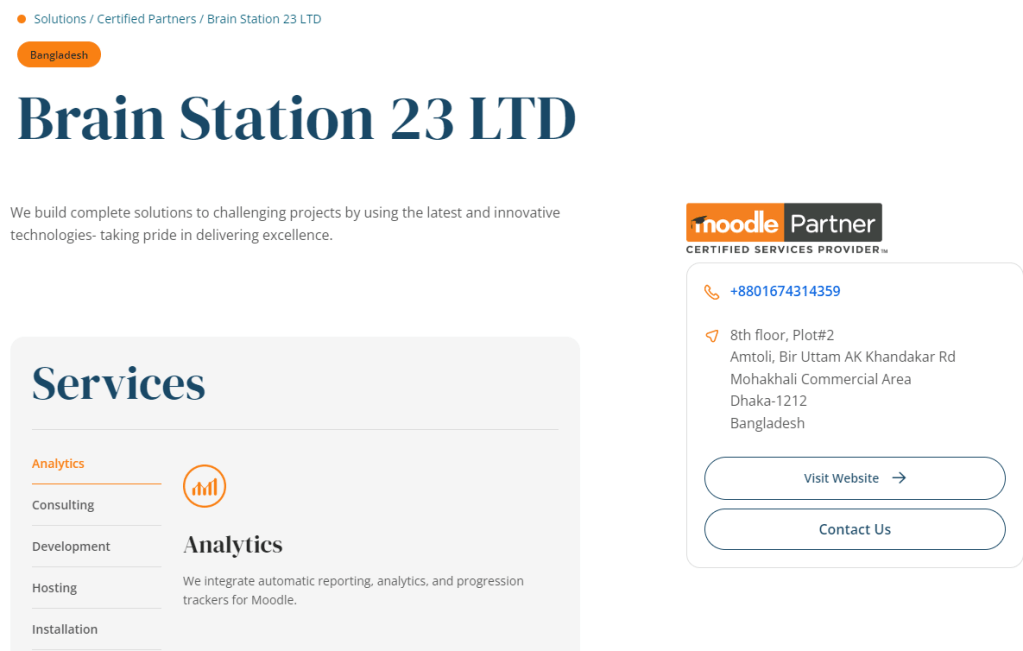

Moodle LMS in AWS

Brain Station 23 is the only Moodle solution partner in Bangladesh. We have been working with both local and global clients in development and consultancy capacities.

For deploying Moodle on AWS, a recommended architecture that can ensure high availability is given below:

AWS User Group Bangladesh reached 6K+ members

AWS User Group Bangladesh (https://www.facebook.com/groups/AWSBangladesh) was created on both Facebook and LinkedIn to build a strong community of AWS enthusiasts. It was established more than 5 years ago, and new members keep joining to support each other. I am the co-founder of this group and a community leader. Our vision is to play a key role in cloud adoption here. We have arranged many workshops and seminars over the years. Before the pandemic, all events were physical; now we focus more on virtual events for participants’ safety. We continuously help community members solve different problems to strengthen cloud adoption.